Agent-Based Evolutionary Dynamics in 1 population

Abed-1pop is a modeling framework designed to simulate the evolution of a population of agents who play a symmetric 2-player game and, from time to time, are given the opportunity to revise their strategy

How to install Abed-1pop

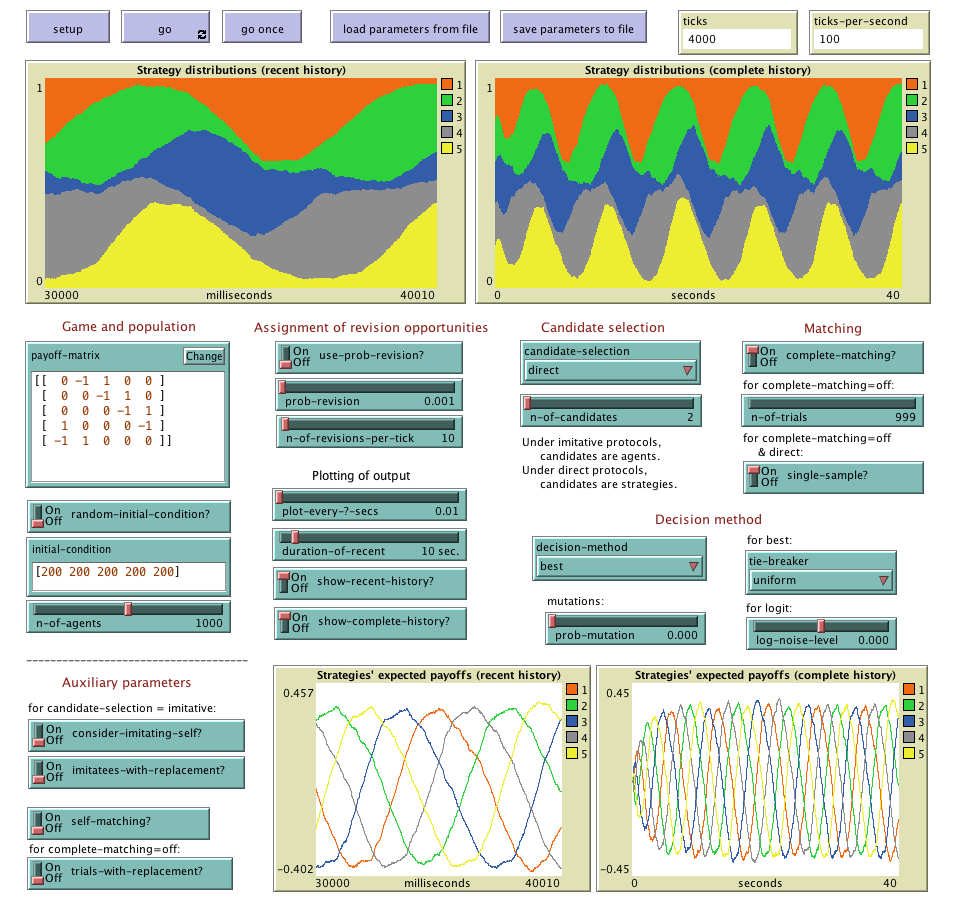

To use Abed-1pop, you will have to install NetLogo (free and open source) 6.0.1 or higher and download this zip file. The zip file contains the model itself (abed-1pop.nlogo) and also this very webpage (which contains a description of the model). To run the model, you just have to click on the file abed-1pop.nlogo. The figure below shows Abed-1pop's interface.

Overview

We use bold green fonts to denote

Abed-1pop is a modeling framework designed to simulate the evolution of a population of

The simulation runs in discrete time-steps called ticks. Within each tick:

- The set of revising agents is chosen

- The game is played by the revising agents and all the agents involved in their revision

- The revising agents simultaneously update their strategies

This sequence of events, which is explained below in detail, is repetead iteratively.

Selection of revising agents

All agents are equally likely to revise their strategy in every tick. There are two ways in which the set of revising agents can be chosen:

- By setting parameter

prob-revision , which is the probability that each individual agent revises his strategy in every tick. - By setting parameter

n-of-revisions-per-tick , which is the number of randomly selected agents that will revise their strategy in every tick.

Computation of payoffs

The payoff that each agent obtains in each tick is computed as the average payoff over

Specifying how payoffs are computed

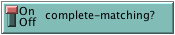

There are parameters to specify:

- the

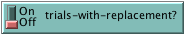

n-of-trials agents make, - whether these are conducted with or without replacement (

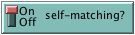

trials-with-replacement? ), and - whether agents may play with themselves or not (

self-matching? ).

Strategy revision

Agents are occasionally given the opportunity to revise their strategy. The specific protocol used by revising agents is determined mainly by three parameters:

-

candidate-selection , which indicates whether the protocol isimitative ordirect (Sandholm, 2010, p. 9), -

n-of-candidates , which determines the number of candidates (agents or strategies) that revising agents will consider to update their strategy, and -

decision-method , which determines how to single out one instance out of the set of candidates. Abed-1pop implements the following methods:best ,logit ,positive-proportional ,pairwise-difference ,linear-dissatisfaction andlinear-attraction .

We briefly explain the meaning of each of these terms below.

Imitative Protocols

Under

Imitative protocols: Specifying how to compile the set of candidate agents to copy

There are parameters to specify:

- the number of candidate agents that revising agents will consider to copy (

n-of-candidates ), - whether the selection of other candidates (besides the revising agent) is conducted with or without replacement (

imitatees-with-replacement? ), and - whether the selection of other candidates (besides the revising agent) considers the revising agent again or not (

consider-imitating-self? ).

Direct Protocols

In contrast, under

Direct protocols: Specifying how to test strategies

There are parameters to specify:

- the number of strategies that revising agents consider, including their current strategy (

n-of-candidates ), - whether the strategies in the test set are compared according to a payoff computed using one single sample of agents (i.e. the same set of agents for every strategy), or whether the payoff of each strategy in the test set is computed using a freshly drawn random sample of agents (

single-sample? ).

Decision methods

A

- one particular agent out of a set of candidates, whose strategy will be adopted by the revising agent (in

imitative protocols), or - one particular strategy out of a set of candidate strategies, which will be adopted by the revising agent (in

direct protocols).

The selection is based on the (average) payoff obtained by each of the candidates (agents or strategies) in the input set. In

-

best : The candidate with the highest payoff is selected. Ties are resolved according to the procedure indicated with parametertie-breaker . -

logit : A random weighted choice is conducted among the candidates. The weight for each candidate is e(payoff / (10 ^log-noise-level )). -

positive-proportional : A candidate is randomly selected with probabilities proportional to payoffs. Thus, payoffs should be non-negative if thisdecision-method is used. -

pairwise-difference : In this method the set of candidates contains two elements only: the one corresponding to the revising agent's own strategy (or to himself inimitative protocols) and another one. The revising agent adopts the other candidate strategy only if the other candidate's payoff is higher than his own, and he does so with probability proportional to the payoff difference. -

linear-dissatisfaction : In this method the set of candidates contains two elements only: the one corresponding to the revising agent's own strategy (or to himself inimitative protocols) and another one. The revising agent adopts the other candidate strategy with probability proportional to the difference between the maximum possible payoff and his own payoff. (The other candidate's payoff is ignored.) -

linear-attraction : In this method the set of candidates contains two elements only: the one corresponding to the revising agent's own strategy (or to himself inimitative protocols) and another one. The revising agent adopts the other candidate strategy with probability proportional to the difference between the other candidate's payoff and the minimum possible payoff. (The revising agent's payoff is ignored.)

Parameters

Parameters to set up the population and its initial strategy distribution

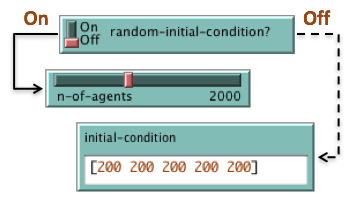

- If

random-initial-condition? isOn , the population will consist ofn-of-agents agents, each of them with a random initial strategy. - If

random-initial-condition? isOff , the population will be created from the list given asinitial-condition . Let this list be [x1 x2 ... xn]; then the initial population will consist of x1 agents playing strategy 1, x2 agents playing strategy 2, ... , and xn agents playing strategy n. The value ofn-of-agents is then set to the total number of agents in the population.

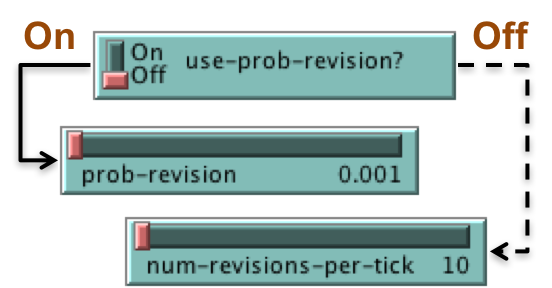

Parameters that determine when agents revise their strategy

- If

use-prob-revision? isOn , each individual agent revises his strategy with probabilityprob-revision in every tick. - If

use-prob-revision? isOff , thenn-of-revisions-per-tick agents are randomly selected to revise their strategy in every tick.

The value of these three parameters can be changed at runtime with immediate effect on the model.

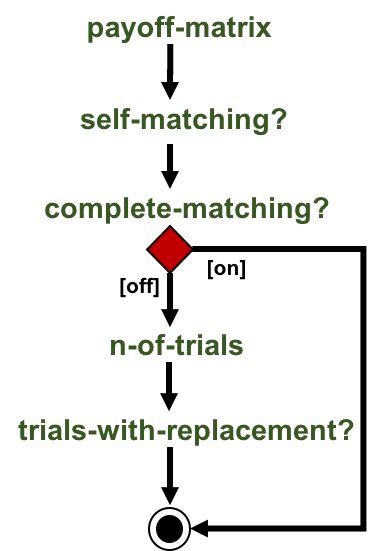

Parameters that determine how payoffs are calculated

This flowchart is a summary of all the parameters that determine how payoffs are calculated.

The idea is to start at the top of the flowchart, give a value to every parameter you encounter on your way and, at the decision nodes (i.e. the red diamonds), select the exit arrow whose [label] indicates the value of the parameter you have just chosen. Thus, note that, depending on your choices, it may not be necessary to set the value of all parameters.

Every parameter is explained below

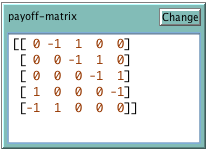

Payoff matrix for the symmetric game. Entry Aij in the matrix denotes the payoff obtained by player row when he chooses strategy i and his counterpart uses strategy j. The number of strategies is assumed to be the number of rows (and columns) in this square matrix.

- If

self-matching? isOn , agents can be matched with themselves. - If

self-matching? isOff , agents cannot be matched with themselves.

If

- Everyone, including themselves, if

self-matching? isOn . - Everyone else, if

self-matching? isOff .

If

Parameter

Parameter

We assume clever payoff evaluation (see Sandholm, 2010, section 11.4.2, pp. 419-421), i.e. when a revising agent tests a strategy s different from his current strategy, he assumes that he switches to this strategy s (so the population state changes), and then he computes the payoff he would get in this new state (i.e. he computes the actual payoff he would get if only he changed his strategy).

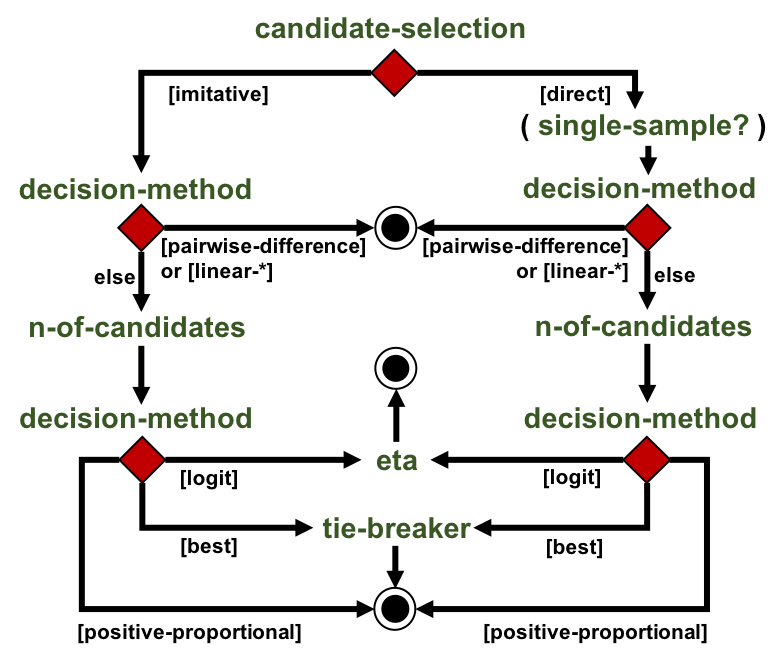

Parameters that determine how agents revise their strategy

With probability

The idea is to start at the top of the flowchart, give a value to every parameter you encounter on your way and, at the decision nodes (i.e. the red diamonds), select the exit arrow whose [label] indicates the value of the parameter you have just chosen. Every parameter is explained below.

Parameter

The multiset of candidate agents contains the revising agent (which is always included) plus a sample of (

-

imitatees-with-replacement? indicates whether the sample is taken with or without replacement. -

consider-imitating-self? indicates whether the sample is taken from the whole population (i.e. including the reviser) or from the whole population excluding the reviser. Note that, in any case, the reviser is always part of the multiset of candidate agents at least once.

The set of candidate strategies contains the revising agent's own strategy plus (

Parameter

The user can set the value of this parameter only if

Parameter

Specifically, the chosen

The candidate with the highest payoff is returned. Ties are resolved using the selected

-

stick-uniform : If the current strategy of the revising agent is in the tie, the revising agent sticks to it. Otherwise, a random choice is made using the uniform distribution. -

stick-min : If the current strategy of the revising agent is in the tie, the revising agent sticks to it. Otherwise, the strategy with the minimum number is selected. -

uniform : A random choice is made using the uniform distribution. -

min : The strategy with the minimum number is selected. -

random-walk : This tie-breaker makes use of an auxiliary random walk that is running in the background. Let N be the number of agents in Abed-1pop and let n be the number of strategies. In the auxiliary random walk, there are N rw-agents, plus a set of n so-called committed rw-agents, one for each of the n strategies in Abed-1pop. The committed rw-agents never change strategy. In each iteration of this random walk, one uncommitted rw-agent is selected at random to imitate another (committed or uncommitted) rw-agent, also chosen at random. We run one iteration of this process per tick. When there is a tie in Abed-1pop, the relative frequency of each strategy in the auxiliary random walk is used as a weight to make a random weighted selection among the tied candidates.

A random weighted choice is conducted among the candidates. The weight for each candidate is e(payoff / (10 ^

A candidate is randomly selected, with probabilities proportional to payoffs. Because of this, when using

In

The revising agent will consider adopting the other candidate strategy only if the other candidate's payoff is higher than his own, and he will actually adopt it with probability proportional to the payoff difference. In order to turn the difference in payoffs into a meaningful probability, we divide the payoff difference by the maximum possible payoff minus the minimum possible payoff.

In

The revising agent will adopt the other candidate strategy with probability equal to the difference between the maximum possible payoff and his own payoff, divided by the maximum possible payoff minus the minimum possible payoff. (The other candidate's payoff is ignored.)

In

The revising agent will adopt the other candidate strategy with probability equal to the difference between the other candidate's payoff and the minimum possible payoff, divided by the maximum possible payoff minus the minimum possible payoff. (The revising agent's payoff is ignored.)

How to save and load parameter files

Clicking on the button labeled , a window pops up to allow the user to save all parameter values in a .csv file.

Clicking on the button labeled , a window pops up to allow the user to load a parameter file in the same format as it is saved by Abed-1pop.

How to use it

Running the model

Once the model is parameterized (see Parameters section), click on the button labeled to initialize everything. Then, click on to run the model indefinitely (until you click on again). A click on runs the model one tick only.

The value of all parameters can be changed at runtime with immediate effect on the model

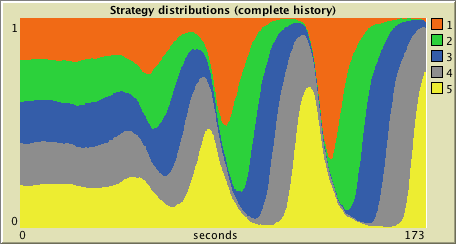

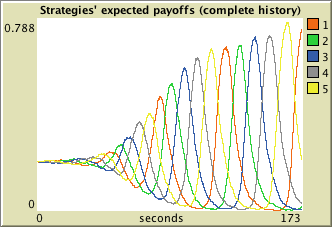

Plots and monitors

All plots in Abed-1pop show units of time in the horizontal axis. These are called seconds and milliseconds for simplicity, but they could be called in any other way. One second is defined as the number of ticks over which the expected number of revisions equals the total number of agents. This number of ticks per second is called

and is shown in a monitor. The other monitor in Abed-1pop shows the

that have been executed. Plots are updated every

There are two plots to show the strategy distribution in the population of agents as ticks go by, like the one below:

and two other plots to show the expected payoff of each strategy as ticks go by, like the one below:

Each of the two types of plots described above comes in two different flavors: one that shows the data from the beginning of the simulation (labeled complete history and active if

License

Abed-1pop (Agent-Based Evolutionary Dynamics in 1 population) is a modeling framework designed to simulate the evolution of a population of agents who play a symmetric 2-player game and, from time to time, are given the opportunity to revise their strategy.

Copyright (C) 2017 Luis R. Izquierdo, Segismundo S. Izquierdo & Bill Sandholm

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

You can download a copy of the GNU General Public License by clicking here; you can also get a printed copy writing to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.

Contact information:

Luis R. Izquierdo

University of Burgos, Spain.

e-mail: lrizquierdo@ubu.es

Extending the model

You are most welcome to extend the model as you please, either privately or by creating a branch in our Github repository. If you’d like to see any specific feature implemented but you don’t know how to go about it, do feel free to email us and we’ll do our best to make it happen.

Acknowledgements

Financial support from the U.S. National Science Foundation, the U.S. Army Research Office, the Spanish Ministry of Economy and Competitiveness, and the Spanish Ministry of Science and Innovation is gratefully acknowledged.

References

Sandholm, W. H. (2010). Population Games and Evolutionary Dynamics. MIT Press, Cambridge.

Weibull, J. W. (1995). Evolutionary Game Theory. MIT Press, Cambridge.

How to cite

Izquierdo, L.R., Izquierdo, S.S. & Sandholm, W.H. (2019). An Introduction to ABED: Agent-Based Simulation of Evolutionary Game Dynamics. Games and Economic Behavior, 118, pp. 434-462.

[Download at publishers's site] | [Working Paper] | [Online appendix] | [Presentation]