To use reinforcement-learning-2x2, you will have to install NetLogo 5.3.1 (free and open source) and download the model itself. Unzip the downloaded file and open reinforcement-learning-2x2.nlogo.

reinforcement-learning-2x2 is an agent-based model where two reinforcement learners play a 2x2 game. Reinforcement learners use their experience to choose or avoid certain actions based on the observed consequences. Actions that led to satisfactory outcomes (i.e. outcomes that met or exceeded aspirations) in the past tend to be repeated in the future, whereas choices that led to unsatisfactory experiences are avoided.

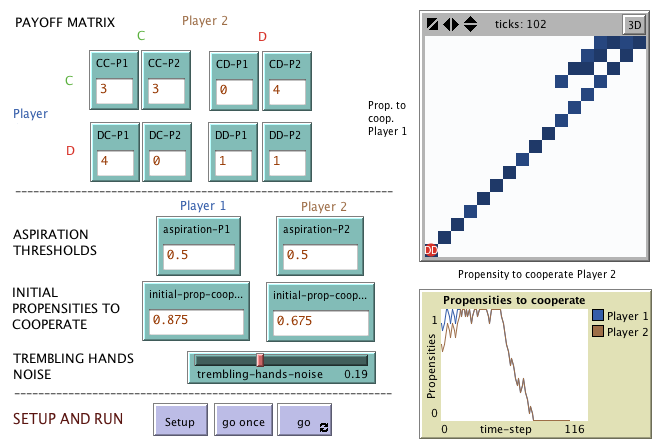

In this model there are two reinforcement learners playing a 2x2 game repeatedly. Each player r (r = 1,2) has a certain propensity to cooperate p(r,C) and a certain propensity to defect p(r,D); these propensities are always multiples of 1/(world-height – 1) for player 1 and multiples of 1/(world-width – 1) for player 2. In the absence of noise, players cooperate with probability p(r,C) and defect with probability p(r,D), but they may also suffer from "trembling hands", i.e. after having decided which action to undertake, each player r may select the wrong action with probability trembling-hands-noise.
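The action-selection step described above can be illustrated with a minimal Python sketch. It is not the model's NetLogo code: the function names `choose_action` and `effective_cooperation_probability` are hypothetical, and the sketch assumes that a "tremble" simply flips the intended action.

```python
import random


def choose_action(p_cooperate, trembling_hands_noise, rng=random):
    """Pick 'C' or 'D' for one player in one round (illustrative sketch).

    p_cooperate is the player's propensity to cooperate, p(r,C);
    the propensity to defect is 1 - p_cooperate.
    """
    # Intended action, drawn from the player's propensities.
    intended = "C" if rng.random() < p_cooperate else "D"
    # Trembling hands: with the given probability the other action is played.
    if rng.random() < trembling_hands_noise:
        return "D" if intended == "C" else "C"
    return intended


def effective_cooperation_probability(p_cooperate, trembling_hands_noise):
    """Probability that 'C' is actually played once noise is accounted for."""
    eps = trembling_hands_noise
    return p_cooperate * (1 - eps) + (1 - p_cooperate) * eps
```

Under these assumptions, a player with p(r,C) = 1 and trembling-hands-noise = 0.1 ends up cooperating with probability 0.9, so noise keeps both actions possible even at the extremes of the propensity range.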

Propensities are revised following a reinforcement learning approach: players increase their propensity to undertake a certain action if it led to a payoff at or above their aspiration level A(r), and decrease this propensity otherwise. Specifically, if player r receives a payoff greater than or equal to her aspiration threshold A(r), she increases the propensity of the selected action by 1/(world-height – 1) if r = 1, or by 1/(world-width – 1) if r = 2 (within the natural limits of probabilities). Otherwise she decreases this propensity by the same amount. The updated propensity for the action not selected follows from the constraint that propensities must add up to one.
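As a rough illustration of this update rule, here is a short Python sketch. It is not taken from the model's source: the function name `update_propensity` and its parameters are hypothetical, and exact fractions are used only so that propensities stay exact multiples of the step size.

```python
from fractions import Fraction

ZERO, ONE = Fraction(0), Fraction(1)


def update_propensity(p_cooperate, action, payoff, aspiration, step):
    """Return the new propensity to cooperate after one round (sketch).

    action is the selected action ('C' or 'D'); step is
    1/(world-height - 1) for player 1 or 1/(world-width - 1) for player 2.
    """
    # Propensity of the action that was selected this round.
    p_selected = p_cooperate if action == "C" else ONE - p_cooperate
    if payoff >= aspiration:
        # Satisfactory outcome: reinforce the selected action, capped at 1.
        p_selected = min(ONE, p_selected + step)
    else:
        # Unsatisfactory outcome: weaken the selected action, floored at 0.
        p_selected = max(ZERO, p_selected - step)
    # The other action's propensity follows from the sum-to-one constraint.
    return p_selected if action == "C" else ONE - p_selected
```

For example, with a step of 1/10, a player with p(r,C) = 1/2 who defects and receives a payoff at or above her aspiration moves to p(r,D) = 3/5, and therefore p(r,C) = 2/5.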

The view is used here to represent players' propensities to cooperate, with player 1's propensity to cooperate on the vertical axis and player 2's propensity to cooperate on the horizontal axis. The red circle represents the current state of the system and its label (CC, CD, DC, or DD) denotes the last outcome that occurred. Patches are coloured in shades of blue according to the number of times that the system has visited the state they represent: the higher the number of visits, the darker the shade of blue. The plot beneath the representation of the state space shows the time series of both players' propensity to cooperate.
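The correspondence between states and patches can be sketched as follows. This Python fragment is only an illustration under stated assumptions: it assumes patch coordinates start at 0 in the bottom-left corner, and the names `state_to_patch` and `record_visit` are hypothetical rather than taken from the model.

```python
from collections import Counter
from fractions import Fraction


def state_to_patch(p1_cooperate, p2_cooperate, world_width, world_height):
    """Map a pair of propensities to (x, y) patch coordinates (sketch).

    Player 2's propensity runs along the horizontal axis and
    player 1's along the vertical axis.
    """
    x = round(p2_cooperate * (world_width - 1))
    y = round(p1_cooperate * (world_height - 1))
    return x, y


def record_visit(visits, p1_cooperate, p2_cooperate, world_width, world_height):
    """Increment the visit count of the patch representing the current state."""
    patch = state_to_patch(p1_cooperate, p2_cooperate, world_width, world_height)
    visits[patch] += 1
    return patch


# Usage: a Counter holds the number of visits per patch; a darker shade
# would then be assigned to patches with higher counts.
visits = Counter()
record_visit(visits, Fraction(1, 2), Fraction(1, 4), 101, 101)
```

Because propensities are multiples of 1/(world-height – 1) and 1/(world-width – 1), every reachable state lands exactly on one patch under this mapping.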

reinforcement-learning-2x2 is an agent-based model where two reinforcement learners play a 2x2 game.

Copyright (C) 2008 Luis R. Izquierdo & Segismundo S. Izquierdo

This program is free software; you can redistribute it and/or modify it under the terms of the GNU General Public License as published by the Free Software Foundation; either version 3 of the License, or (at your option) any later version.

This program is distributed in the hope that it will be useful, but WITHOUT ANY WARRANTY; without even the implied warranty of MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the GNU General Public License for more details.

A copy of the GNU General Public License is available at <https://www.gnu.org/licenses/>; you can also get a printed copy by writing to the Free Software Foundation, Inc., 51 Franklin Street, Fifth Floor, Boston, MA 02110-1301, USA.

Contact information:

Luis R. Izquierdo

University of Burgos, Spain.

e-mail: lrizquierdo@ubu.es

This program has been designed and implemented by Luis R. Izquierdo & Segismundo S. Izquierdo.